Chi Han (UIUC) - Internal Workings of Foundation Models: Diagnosing and Adapting Internal Representations

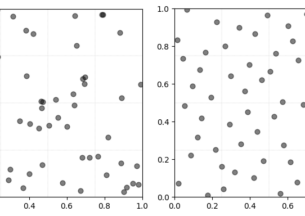

Abstract: While foundation models (FMs) continue to revolutionize natural language processing and AI applications, tracing, locating, and precisely addressing their limitations remains a major challenge. The development of future language models would greatly benefit from a structural understanding of FMs. This presentation brings together several recent papers that systematically explain the internal representations of FMs from both theoretical and empirical perspectives. These works offer preliminary characterizations of the roles and adaptation of internal components: (1) how positional representations hold clues for resolving the context length limitations of FMs, (2) how word representations can be used to steer and control generation, and (3) how cross-modal representations can be best aligned for scientific discovery. Together, they provide insights into addressing the inherent limitations of FMs in a principled and efficient way, and point to a promising future of developing a modular “anatomy” for foundation models.

Speakers

Chi Han

Chi Han is currently a final-year Computer Science Ph.D. student in the NLP group at the University of Illinois Urbana-Champaign (UIUC), advised by Prof. Heng Ji. Before joining UIUC, he was an undergraduate student in the Yao Class program at Tsinghua University, China. He visited the CoCoSci Lab at the Massachusetts Institute of Technology (MIT) during his undergraduate studies. He has first-authored papers at top conferences, including NeurIPS, ICLR, ACL, and NAACL, and received outstanding paper awards for first-authored papers at NAACL 2024 and ACL 2024. He is also a recipient of the IBM PhD Fellowship and the Amazon AICE PhD Fellowship. His research interests center on a theoretical understanding of representations in foundation models (FMs), with the aim of providing insights and tools for efficient, controllable, and interpretable foundation models.