Peter West (U of B.C.)- Can Helpful Assistants be Unpredictable? Limits of Aligned LLMs

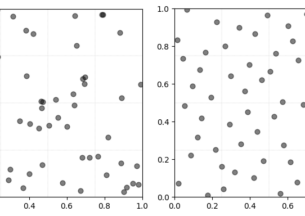

Abstract: The majority of public-facing language models have undergone some form of alignment–a family of techniques (e.g. reinforcement learning from human feedback) which aim to make models safer, more honest, and better at following instructions. In this talk, I will investigate the downsides of aligning LLMs. While the process improves model performance across a broad range of benchmark tasks, particularly those for which a “correct” answer is clear, it seems to mitigate some of the most interesting aspects of LLMs, including unpredictability and generation of text that humans find creative.

Speakers

Peter West

Peter is an Assistant Professor at UBC and a recent postdoc at the Stanford Institute for Human-Centered AI working in Natural Language Processing. His research broadly studies the capabilities and limits of large language models (and other generative AI systems). His work has been recognized with multiple awards, including best method paper at NAACL 2022, outstanding paper at ACL 2023, and outstanding paper at EMNLP 2023