UChicago Students Receive ACM EuroSys Best Paper Award for CacheBlend, a Game-Changer in AI Speed and Precision

Imagine a world where interacting with AI feels intuitive and immediate, just like a conversation with a friend. That vision is becoming a reality thanks to CacheBlend, a revolutionary system developed by Assistant Professor Junchen Jiang and the LMCache Lab at the University of Chicago’s Department of Computer Science. This breakthrough promises to make AI responses faster and more precise, unlocking new possibilities in how we use technology in everyday life.

CacheBlend tackles a common challenge in AI: slow responses and errors that can hinder user experience. By making thoughtful improvements in how AI manages and processes information, this system significantly reduces response times without cutting corners on answer quality. It’s a development that goes beyond technical benefits, enhancing areas where quick and accurate information is invaluable.

“A large language model (LLM) has memory known as KV cache — a tensor-shaped data structure, each encoding the knowledge of a given piece of text after the LLM processes it,” explained Jiang. “Being able to store and reuse such memory (or KV caches) can drastically reduce the amount of computation. Traditionally, the memory of a text can only be reused when the text is at the prefix of a query, precluding its use in popular applications like RAG and Agent. CacheBlend solves this challenge by enabling the memory of a text wherever the text appears in the input. The key insight is that the KV cache of a text only needs to be incrementally updated to cope with its arbitrary position in the query.”
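To make the contrast in Jiang's explanation concrete, here is a minimal toy sketch in Python. It is not CacheBlend's implementation or the LMCache API. It only counts which pieces of an input could reuse stored memory under prefix-only caching versus position-independent reuse. The chunk names, token counts, helper functions, and the 10 percent "incremental update" cost are all hypothetical values chosen for illustration.

```python
from typing import Dict, List, Sequence, Set


def prefix_reusable(past_inputs: List[Sequence[str]], query: Sequence[str]) -> Set[str]:
    """Prefix-only caching: a chunk is reusable only if it and everything
    before it match the start of some earlier input."""
    best = 0
    for past in past_inputs:
        matched = 0
        for old, new in zip(past, query):
            if old != new:
                break
            matched += 1
        best = max(best, matched)
    return set(query[:best])


def position_independent_reusable(past_inputs: List[Sequence[str]], query: Sequence[str]) -> Set[str]:
    """Position-independent reuse: a chunk is reusable if it was seen
    anywhere in an earlier input, wherever it appears in the query."""
    seen = {chunk for past in past_inputs for chunk in past}
    return {chunk for chunk in query if chunk in seen}


def tokens_to_compute(query: Sequence[str], lengths: Dict[str, int],
                      reused: Set[str], update_percent: int = 0) -> int:
    """Tokens the model must still process. Reused chunks pay only a small
    update cost (update_percent is a made-up illustrative knob)."""
    total = 0
    for chunk in query:
        if chunk in reused:
            # Ceiling of length * percent / 100, using integer arithmetic.
            total += -(-lengths[chunk] * update_percent // 100)
        else:
            total += lengths[chunk]
    return total


# Hypothetical RAG-style workload: two earlier requests, then a new one
# that retrieves both documents in a different order.
past = [("sys", "docA", "q1"), ("sys", "docB", "q2")]
query = ("sys", "docB", "docA", "q3")
lengths = {"sys": 10, "docA": 100, "docB": 100, "q3": 10}

prefix_set = prefix_reusable(past, query)
anywhere_set = position_independent_reusable(past, query)

print("prefix-only reuse:", sorted(prefix_set))
print("position-independent reuse:", sorted(anywhere_set))
print("tokens to compute, prefix-only:", tokens_to_compute(query, lengths, prefix_set))
print("tokens to compute, with 10% update:",
      tokens_to_compute(query, lengths, anywhere_set, update_percent=10))
```

In this toy workload, prefix-only caching cannot reuse the stored memory for `docA` because a different document now precedes it, so the whole document must be processed again. Position-independent reuse covers both documents and pays only the assumed update cost, which is the kind of saving the quote describes for applications such as RAG.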

What sets CacheBlend apart is how it handles a case that traditional systems struggle with: text that appears somewhere other than the very start of the input. Unlike prefix-only caching, CacheBlend can reuse the stored memory of a text wherever it appears, updating it incrementally instead of recomputing it from scratch. This efficiency results in smoother interactions for users who rely on AI for immediate advice and information.

Tests on various datasets have demonstrated CacheBlend’s ability to reduce delays and improve system efficiency significantly. These advancements not only make a difference in technology circles but also show promise for enhancing everyday functions across sectors. By facilitating faster and clearer communication, CacheBlend supports personal and professional development in environments where time-sensitive decisions are critical.

CacheBlend doesn’t just exist on paper; it’s actively shaping the real-world landscape of AI. Integrated into the open-source LMCache project, which originated in Jiang’s lab but has evolved into a community-driven initiative, CacheBlend is widely used across industries. This system has become the official open-source KV caching layer in major organizations such as Red Hat, IBM, Google, and CoreWeave. Ion Stoica, a professor at UC Berkeley, remarked, “LMCache, a project within the vLLM ecosystem, demonstrates how academic research can drive real-world impact through open-sourcing advanced system design and algorithms. Its implementation provides a clear roadmap for bridging the gap between state-of-the-art ML systems research and enterprise-grade LLM deployment.”

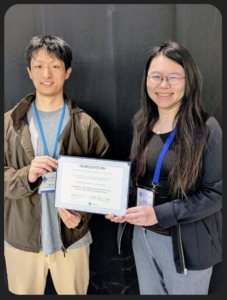

CacheBlend’s introduction into the AI realm has not only sparked excitement but also garnered prestigious recognition. Earlier this year, Assistant Professor Junchen Jiang and his team were honored with the Best Paper Award at the ACM EuroSys 2025 conference—an accolade reserved for only one or two outstanding papers amid hundreds of entries.

This award illustrates the system’s potential, reflecting both its technical merit and its capacity to positively affect the future of AI applications. Such recognition highlights CacheBlend’s dual impact: advancing technological innovation while providing societal benefits by making AI systems more efficient and trustworthy.

Looking ahead, CacheBlend’s open-source availability encourages global collaboration, inviting developers to contribute to ongoing improvements. This shared effort promises to inspire further advancements, ensuring AI technology continues to meet diverse human needs effectively. The project can be explored further on GitHub.